Data for pipeline building – Pipelines using TensorFlow Extended

For this exercise, the data is downloaded from Kaggle (link provided below); the dataset is released under the CC0: Public Domain license. It contains EEG measurements together with the state of the eye, which was captured by camera: 1 indicates a closed eye and 0 indicates an open eye.

https://www.kaggle.com/datasets/robikscube/eye-state-classification-eeg-dataset
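As a quick sanity check of the label convention described above, a minimal sketch like the following can count open-eye and closed-eye rows. The column names (`AF3`, `F7`, `eyeDetection`) and the three inline rows are assumptions made purely for illustration; check the header of the downloaded CSV before relying on them.

```python
import csv
import io
from collections import Counter

# Hypothetical three-row sample for illustration only; the real file
# has many more rows and EEG channel columns.
SAMPLE_CSV = """AF3,F7,eyeDetection
4329.23,4009.23,0
4324.62,4004.62,0
4327.69,4006.67,1
"""


def count_eye_states(csv_text, label_column="eyeDetection"):
    """Count rows per label value (1 = closed eye, 0 = open eye)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return Counter(int(row[label_column]) for row in reader)


counts = count_eye_states(SAMPLE_CSV)
print("open:", counts[0], "closed:", counts[1])
```

For the real dataset, the same function can be applied to the text of the downloaded file instead of the inline sample.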

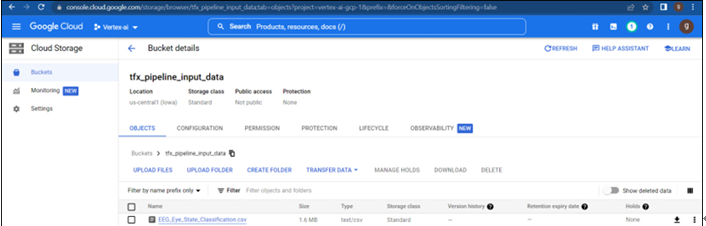

The tfx_pipeline_input_data bucket is created in us-central1 (single region), and the CSV file is uploaded to the bucket, as shown in Figure 8.2:

Figure 8.2: Data in GCS for pipeline construction
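The upload can also be done programmatically rather than through the console. The following is a minimal sketch, assuming the `google-cloud-storage` client library is installed and credentials are already configured; the file name `eeg_eye_state.csv` and the helper names `gcs_uri` and `upload_csv` are hypothetical.

```python
def gcs_uri(bucket_name, blob_name):
    """Build the gs:// URI for an object in a bucket."""
    return f"gs://{bucket_name}/{blob_name}"


def upload_csv(local_path, bucket_name, blob_name):
    """Upload a local file to an existing GCS bucket and return its URI."""
    # Imported lazily so gcs_uri() works even without the library installed.
    from google.cloud import storage

    client = storage.Client()
    blob = client.bucket(bucket_name).blob(blob_name)
    blob.upload_from_filename(local_path)
    return gcs_uri(bucket_name, blob_name)


# Example call (requires credentials and an existing bucket):
# upload_csv("eeg_eye_state.csv", "tfx_pipeline_input_data", "eeg_eye_state.csv")
print(gcs_uri("tfx_pipeline_input_data", "eeg_eye_state.csv"))
```

The bucket itself is assumed to exist already; this sketch only covers the upload step.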

Pipeline code walk through

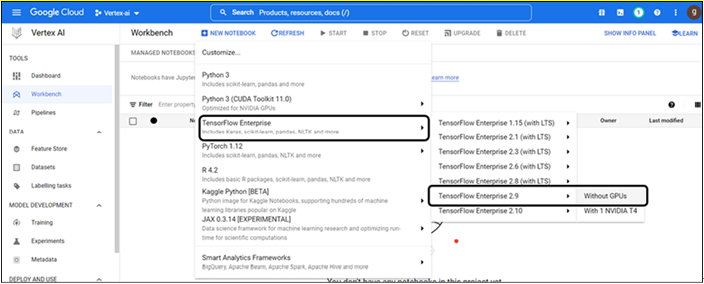

A workbench needs to be created to run the pipeline code. Follow the steps described in the chapter Vertex AI workbench and custom model training to create it, choosing TensorFlow Enterprise | TensorFlow Enterprise 2.9 | Without GPUs, as shown in Figure 8.3. All other steps are the same as described in that chapter.

Figure 8.3: Workbench creation using TensorFlow enterprise

Step 1: Create Python notebook file

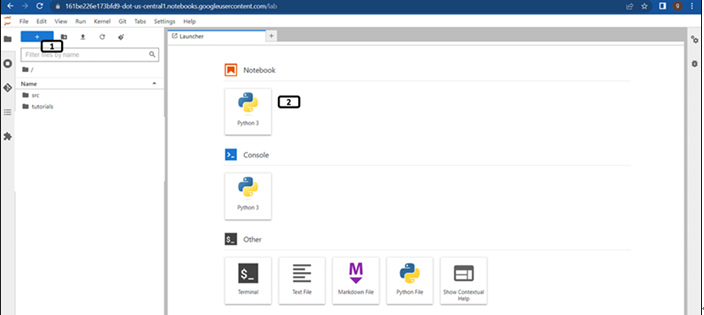

Once the workbench is created, open JupyterLab and follow the steps shown in Figure 8.4 to create a Python notebook file:

Figure 8.4: New launcher window

- Click New launcher.

- Double-click the Python 3 notebook icon to create a notebook file.

From Step 2 onwards, run each code snippet in a separate cell.

Step 2: Package installation

Run the following commands to install TFX with its Kubeflow Pipelines extra, Apache Beam (interactive) and the python-snappy package. (The installation takes a few minutes):

USER_FLAG = "--user"

!pip install {USER_FLAG} --upgrade "tfx[kfp]<2"

!pip install {USER_FLAG} apache-beam[interactive]

!pip install python-snappy

Step 3: Kernel restart

Type the following commands in the next cell to restart the kernel, so that the newly installed packages are picked up. (The kernel can also be restarted from the GUI):

import os

import IPython

if not os.getenv(""):

    IPython.Application.instance().kernel.do_shutdown(True)

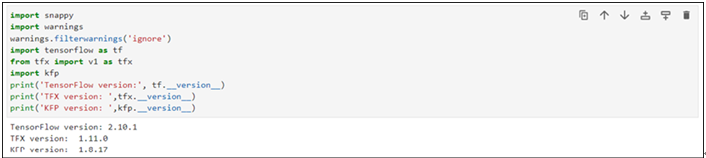

Step 4: Verify packages are installed

Run the following lines of code to check that the packages are installed (if they are not installed properly, try upgrading pip before installing the tfx and kfp packages):

import snappy

import warnings

warnings.filterwarnings('ignore')

import tensorflow as tf

from tfx import v1 as tfx

import kfp

print('TensorFlow version:', tf.__version__)

print('TFX version: ', tfx.__version__)

print('KFP version: ', kfp.__version__)

If the packages are installed properly, you should see the versions of the TensorFlow, TensorFlow Extended and Kubeflow Pipelines packages, as shown in Figure 8.5:

Figure 8.5: Packages installed successfully
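If one of the imports fails, it can help to see which distribution is missing without importing anything. The following is a minimal sketch using the standard library's `importlib.metadata`; the helper name `installed_versions` and the list of distribution names are assumptions chosen to mirror the install step.

```python
from importlib import metadata


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if absent."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


# Distribution names as they would be passed to pip (assumed here).
report = installed_versions(["tensorflow", "tfx", "kfp", "python-snappy"])
for name, version in report.items():
    print(f"{name}: {version or 'NOT INSTALLED'}")
```

Any package reported as missing can then be reinstalled before the kernel is restarted again.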

Step 5: Setting up the project and other variables

Run the following lines of code in a new cell to set the current project and to define the variables that store the paths used by the pipeline:

PROJECT_ID = "vertex-ai-gcp-1"

!gcloud config set project {PROJECT_ID}

BUCKET_NAME = "tfx_pipeline_demo"

NAME_PIPELINE = "tfx-pipeline"

ROOT_PIPELINE = f'gs://{BUCKET_NAME}/root/{NAME_PIPELINE}'

MODULE_FOLDER = f'gs://{BUCKET_NAME}/module/{NAME_PIPELINE}'

OUTPUT_MODEL_DIR = f'gs://{BUCKET_NAME}/output_model/{NAME_PIPELINE}'

INPUT_DATA_DIR = 'gs://tfx_pipeline_input_data'

ROOT_PIPELINE stores the artifacts of the pipeline, MODULE_FOLDER stores the .py file for the trainer component, OUTPUT_MODEL_DIR stores the trained model, and INPUT_DATA_DIR is the GCS location of the input data.
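To make the path layout explicit, the sketch below derives the three output locations from the bucket and pipeline names and checks that each is a GCS URI. The helper `pipeline_paths` is hypothetical and not part of TFX; it only mirrors the f-strings used in this step.

```python
def pipeline_paths(bucket_name, pipeline_name):
    """Derive the pipeline's GCS locations from a bucket and pipeline name."""
    base = f"gs://{bucket_name}"
    return {
        "ROOT_PIPELINE": f"{base}/root/{pipeline_name}",
        "MODULE_FOLDER": f"{base}/module/{pipeline_name}",
        "OUTPUT_MODEL_DIR": f"{base}/output_model/{pipeline_name}",
    }


paths = pipeline_paths("tfx_pipeline_demo", "tfx-pipeline")
for key, value in paths.items():
    # Every location must be a GCS URI for the pipeline to read and write it.
    assert value.startswith("gs://"), f"{key} is not a GCS path"
    print(f"{key} = {value}")
```

Keeping all three locations under one bucket and one pipeline name means a different pipeline name gives a separate set of folders in the same bucket.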