Limitations of Explainable AI – Explainable AI

The following are some of the limitations of Explainable AI in Vertex AI:

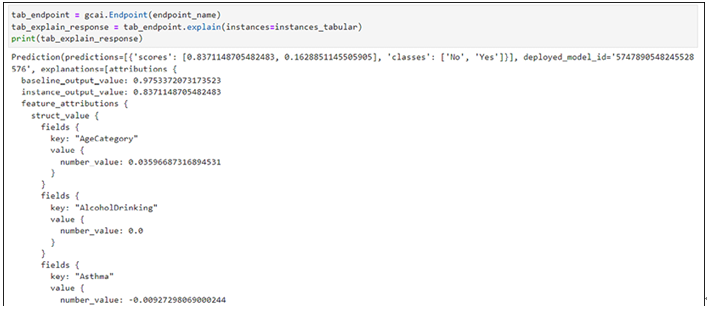

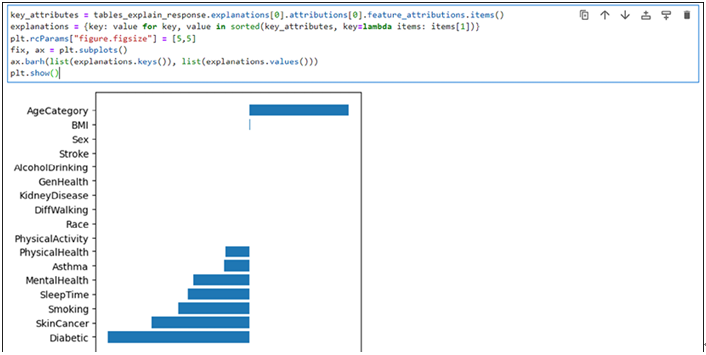

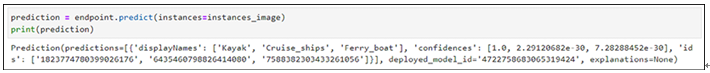

- Each attribution shows only how much a feature influenced the prediction for that particular instance. A single attribution may not represent the overall behavior of the model. To understand approximate model behavior, aggregate the attributions over a dataset.

- Attributions depend entirely on the model and the data used to train it. They can only reveal the patterns the model found in the data, not any underlying relationships in the data. A strong attribution for a feature does not mean that the feature is related to the target; it only indicates that the model uses that feature when making predictions.

- Attributions alone cannot determine the quality of a model. It is recommended to also assess the training data and the evaluation metrics of the model.
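The first limitation above recommends aggregating attributions over a dataset rather than reading a single one. The following is a minimal sketch of one possible aggregation (mean absolute attribution per feature), assuming the per-instance attributions have already been collected into a table. The function name, feature names, and values are hypothetical and are not taken from Vertex AI.

```python
import numpy as np


def aggregate_attributions(attributions, feature_names):
    """Rank features by mean absolute attribution across instances.

    attributions: 2-D array-like, one row per instance, one column per feature.
    Returns a list of (feature_name, mean_abs_attribution), largest first.
    """
    values = np.asarray(attributions, dtype=float)
    # Absolute values are used so positive and negative influence do not cancel.
    mean_abs = np.abs(values).mean(axis=0)
    order = np.argsort(-mean_abs)
    return [(feature_names[i], float(mean_abs[i])) for i in order]


# Hypothetical attributions for three instances and three features.
example_attributions = [
    [0.50, -0.10, 0.02],
    [-0.40, 0.20, 0.01],
    [0.60, -0.30, 0.03],
]
feature_names = ["age", "income", "zip_code"]

ranking = aggregate_attributions(example_attributions, feature_names)
for name, score in ranking:
    print(f"{name}: {score:.3f}")
```

Taking the mean of absolute values is only one choice. A signed mean would instead show the average direction of influence, at the cost of letting opposite effects cancel out.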

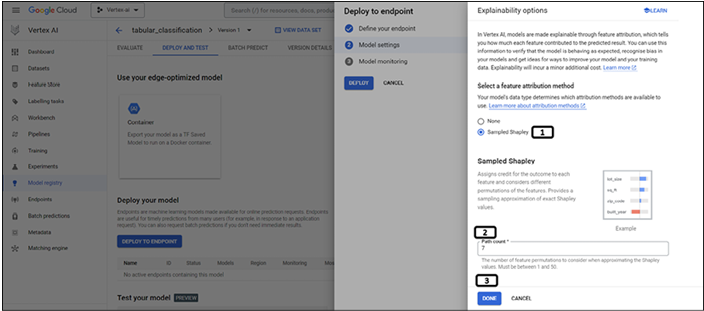

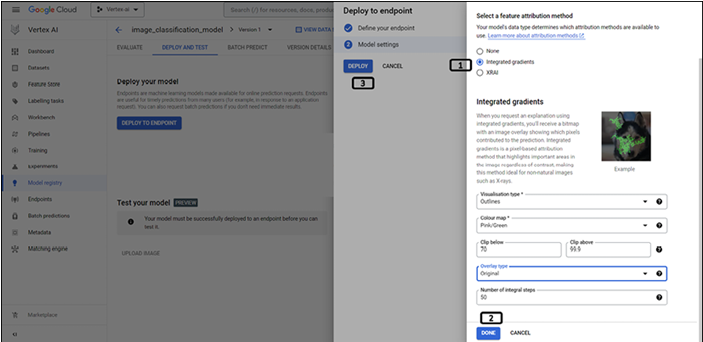

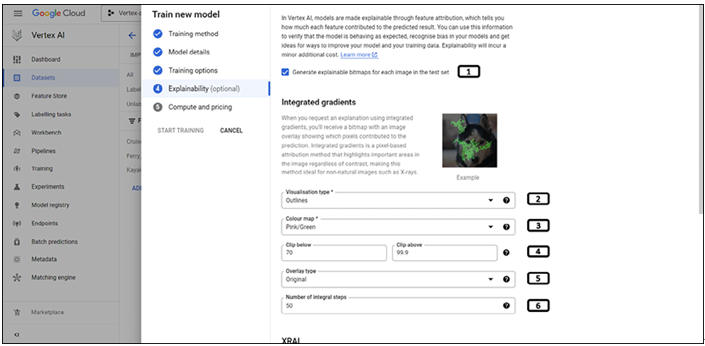

- The integrated gradients method works well for differentiable models (where the derivative of every operation in the TensorFlow graph can be calculated). The sampled Shapley method is used for non-differentiable models (models with non-differentiable operations in the TensorFlow graph, such as rounding and decoding operations).
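To illustrate why a Shapley-style method suits non-differentiable models, here is a minimal sketch of a permutation-sampling Shapley estimate. It only needs to call the model, never to differentiate it. This is an illustrative approximation under stated assumptions, not the Vertex AI implementation; `sampled_shapley`, `toy_model`, the all-zero baseline, and the number of sampled paths are all hypothetical choices.

```python
import numpy as np


def sampled_shapley(predict, instance, baseline, num_paths=200, seed=0):
    """Estimate Shapley attributions by averaging over random feature orderings."""
    rng = np.random.default_rng(seed)
    instance = np.asarray(instance, dtype=float)
    baseline = np.asarray(baseline, dtype=float)
    n = instance.size
    totals = np.zeros(n)
    for _ in range(num_paths):
        current = baseline.copy()
        previous_output = predict(current)
        # Switch features from baseline to instance values in a random order
        # and credit each feature with the change in output it causes.
        for i in rng.permutation(n):
            current[i] = instance[i]
            output = predict(current)
            totals[i] += output - previous_output
            previous_output = output
    return totals / num_paths


def toy_model(x):
    # Rounding makes this model non-differentiable (hypothetical example).
    return float(np.round(2.0 * x[0]) + x[1] * x[2])


instance = [1.2, 3.0, 0.5]
baseline = [0.0, 0.0, 0.0]
attributions = sampled_shapley(toy_model, instance, baseline)
print(attributions, attributions.sum())
```

In this sketch the attributions sum to the difference between the prediction for the instance and the prediction for the baseline, because each sampled ordering telescopes from one to the other. More sampled paths reduce the variance of the estimate at the cost of more model calls.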

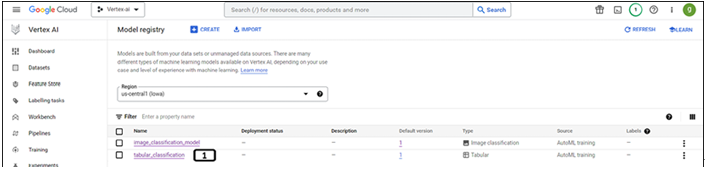

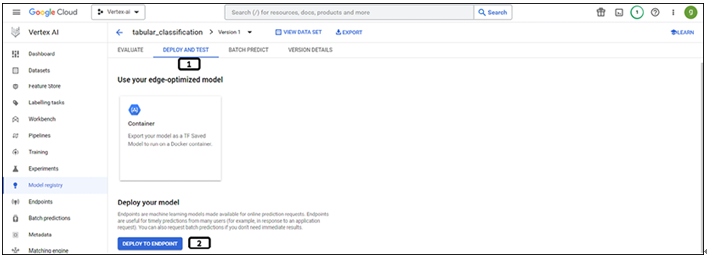

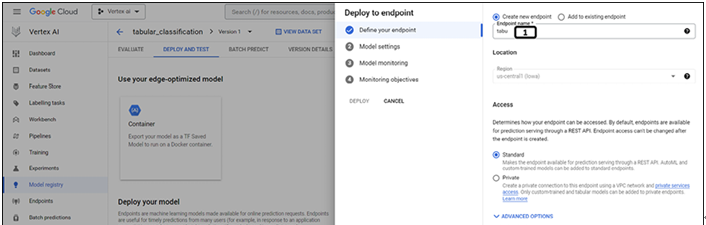

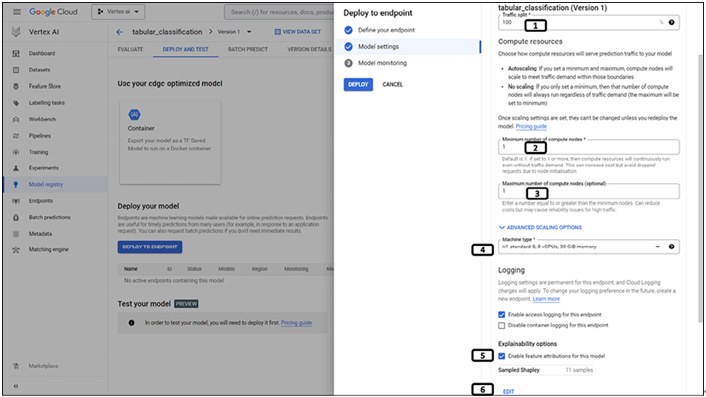

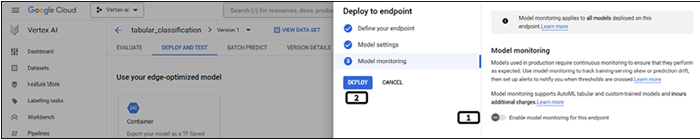

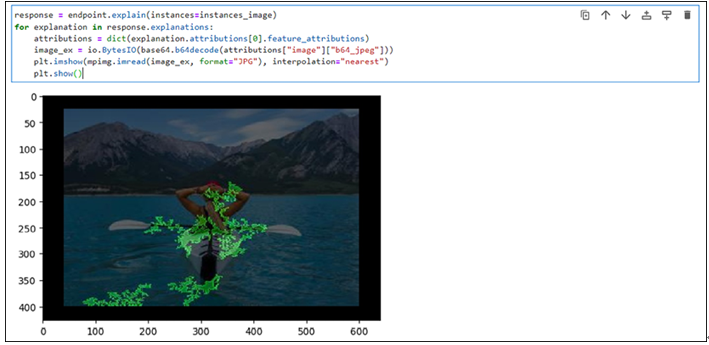

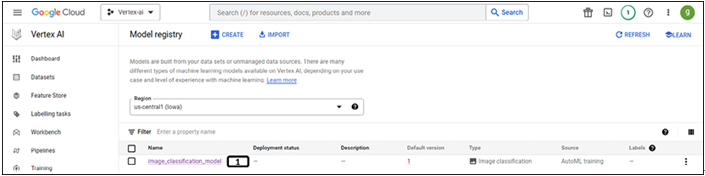

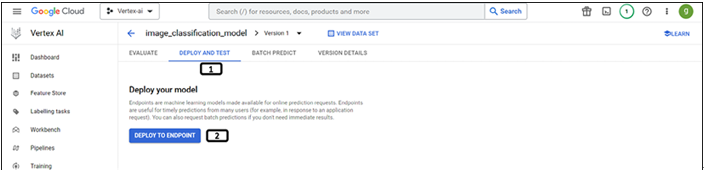

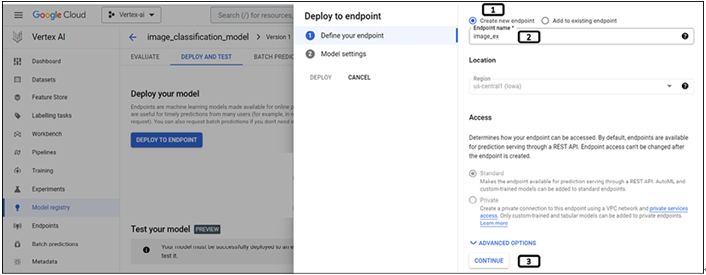

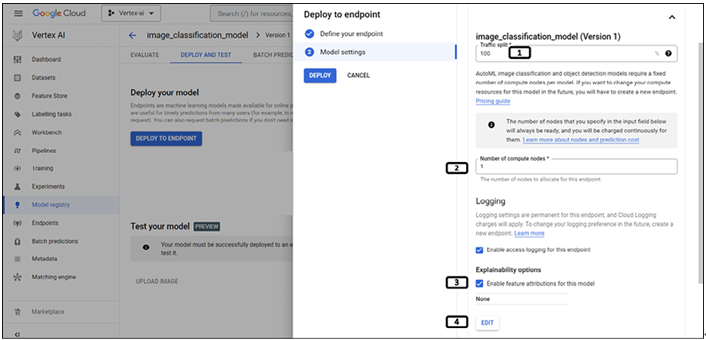

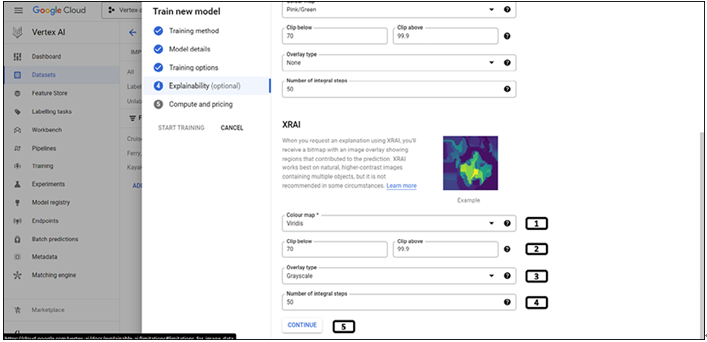

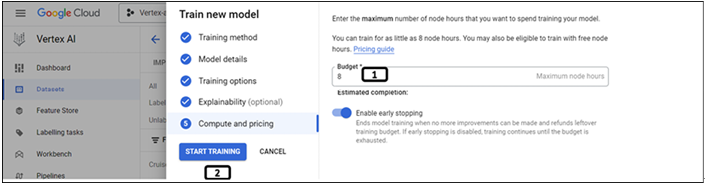

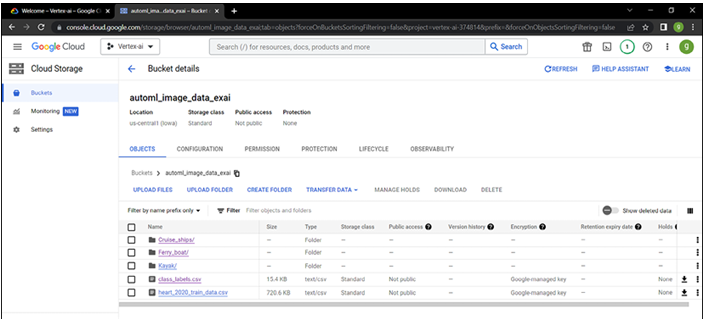

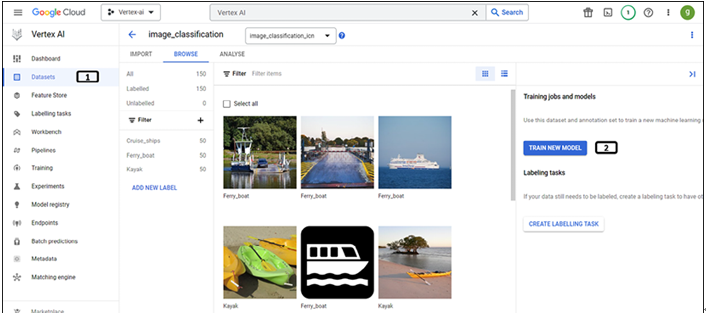

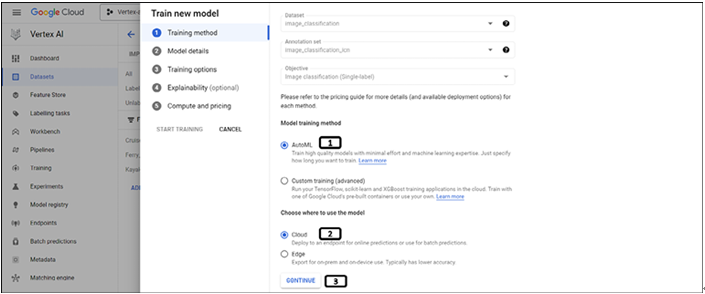

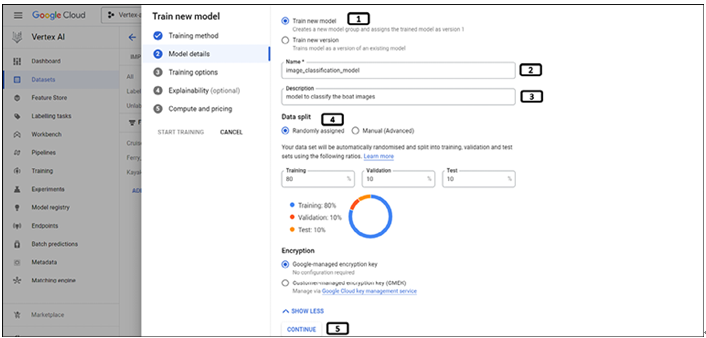

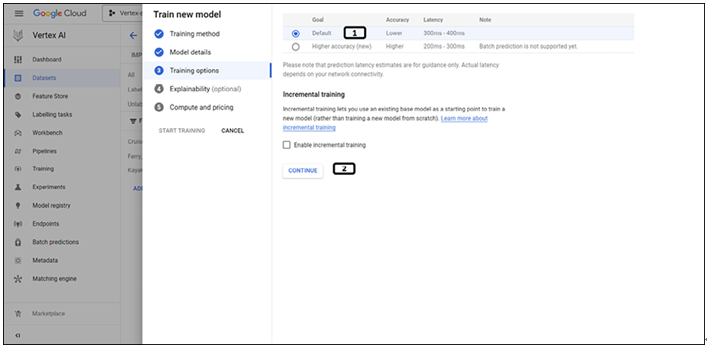

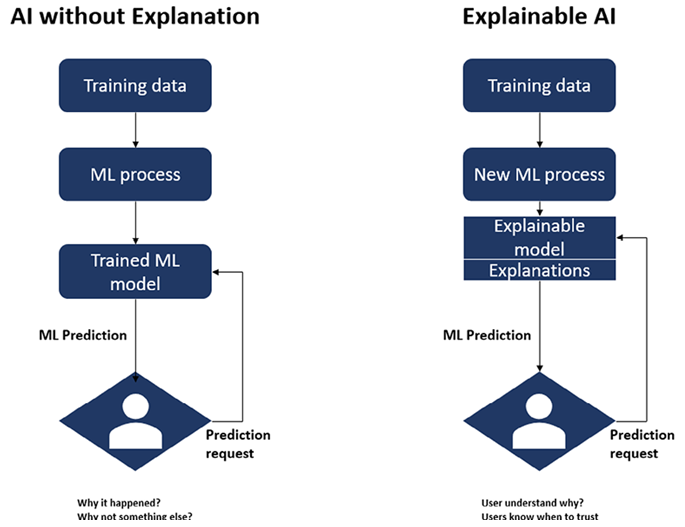

In this book, we started by understanding the cloud platform, a few important components of the cloud, and the advantages of cloud platforms. We then began developing machine learning models through Vertex AI AutoML for tabular, text, and image data, and deployed the trained models to endpoints for online predictions. Before moving on to the complexity of custom model building, we learned how to leverage the pre-built models of the platform to obtain predictions. For the custom models, we used a workbench to develop the model training code and Docker images to submit the training jobs, and we worked on hyperparameter tuning with Vizier to further improve model performance. We used the pipeline components of the platform to train, evaluate, and deploy models for online predictions using both Kubeflow and TFX. We created a centralized repository for features using the feature store of Vertex AI. In this last chapter of the book, we learned about explainable AI and why it is needed. We trained AutoML classification models for image and tabular data with explanations enabled and obtained the explanations using Python code. GCP keeps adding new components and features to enhance its capabilities, so check the platform documentation regularly to stay up to date.

- Why is explainable AI important?

- What are the different types of explanations supported by Vertex AI?

- What is the difference between example-based and feature-based explanations?